Introduction

Bitfount exists to safely unlock the value of sensitive data for the benefit of humankind. We enable data collaborations without needing to transfer data to other parties, an approach known as federated data science.

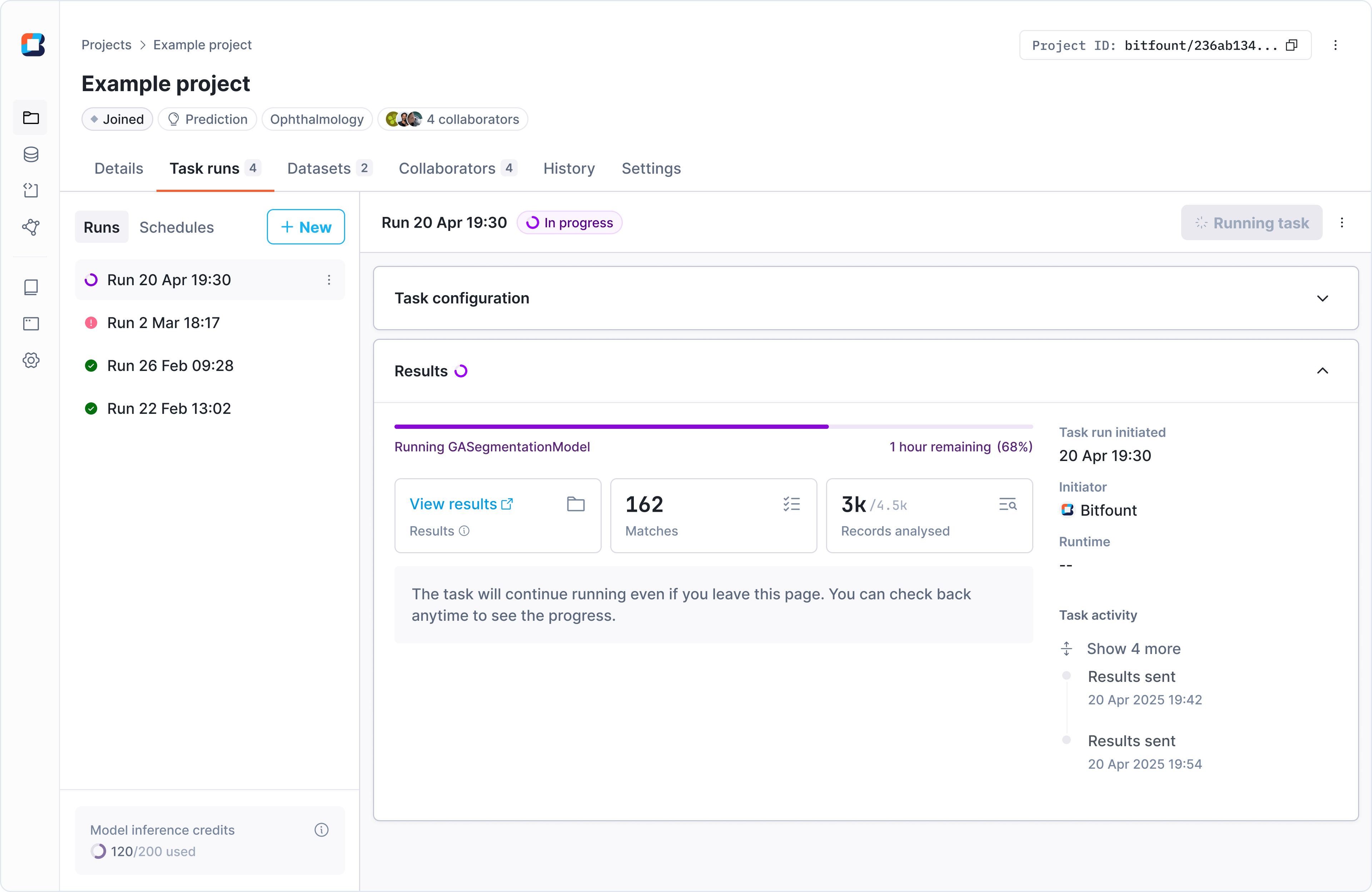

How Bitfount works

With Bitfount, AI models can be securely deployed and run locally in sensitive environments such as hospitals and clinics. This means:

- Data never leaves its original location.

- Insights are generated without writing a single line of code.

Before exploring federated data science, let's first look at how AI models are typically built and used today.

How AI models work

AI models use data to learn and perform tasks—like detecting diseases in medical images or powering voice assistants. To work well, AI models need lots of training data, which is often:

- Stored in different locations (e.g., across hospitals, banks, or mobile devices).

- Too sensitive to share due to privacy laws and security risks.

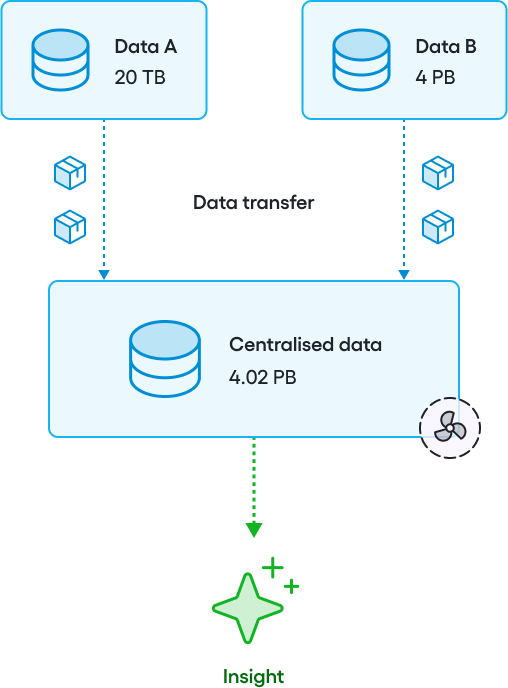

The traditional approach: Data centralisation

The traditional way to solve this problem is data centralisation—bringing all the data together in one place, like a data lake or cloud server.

However, in industries like healthcare and finance, where data is highly sensitive, this approach has serious limitations. Personal information, medical records, or financial details can't simply be shared due to privacy risks, legal restrictions, and strict regulations.

AI has enormous potential to solve big problems—but how can we apply it to sensitive data without compromising security? This is where federated data science comes in.

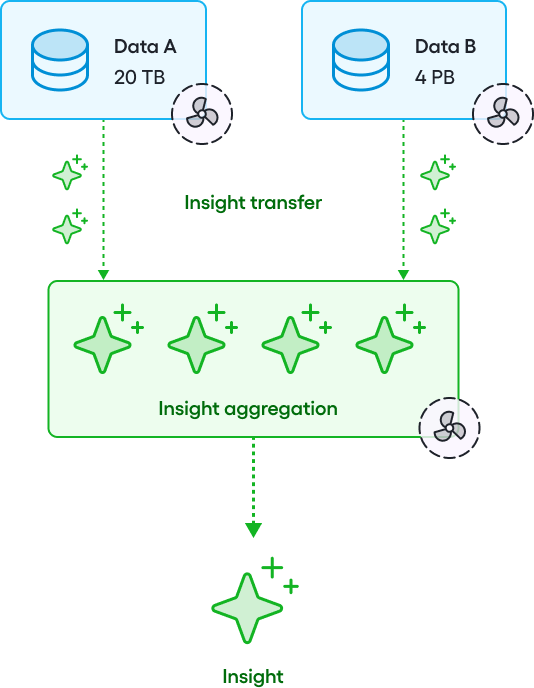

The alternative: Federated data science

Rather than moving data, federated data science sends the AI model to the data.

This enables organisations to:

- Train and improve AI models on datasets they never actually see, even when the data is spread across multiple locations.

- Ensure compliance with privacy regulations.

- Collaborate securely while keeping full control of their data.

This keeps information safe while still allowing AI to learn and improve. Organisations get the insights they need without sharing private data. Let's look at some real-world applications.

How is federated data science used?

Federated data science is already making AI safer and more effective. Some real-world uses include:

- Healthcare: Training AI to detect diseases without sharing patient records.

- Finance: Spotting fraud patterns across banks without exposing customer data.

- Smartphones: Improving voice assistants without collecting users' conversations.

Training AI securely with federated learning

Federated Learning (FL) allows AI models to be trained across multiple locations without sharing raw data. Instead of collecting data in one place, the model learns from distributed datasets stored across different institutions, servers, or devices. Here's how it works:

- Model setup: A global AI model is prepared and sent to multiple locations where data exists.

- Local training: Each site trains the model using its own data but never shares the raw data itself.

- Model updates: Each site sends back only the improvements (updated model parameters), not the data.

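- Aggregation: A central system combines all updates to refine the global model. The most common method, Federated Averaging (FedAvg), takes a weighted average of the sites' updated parameters, weighting each site by the number of training samples it holds, so sites with more data have a greater impact on training.

- Repeat: The improved model is sent back for further training, and the process repeats until performance stops improving or a set number of rounds has been reached.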

This approach distributes the computing workload across many locations, making AI training more efficient, scalable, and privacy-preserving.
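The training loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Bitfount's implementation: the model is a toy linear regressor, the three "sites" are synthetic datasets generated in memory, and the names `local_update`, `fed_avg`, and `make_site` are hypothetical helpers invented for this example.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Local training at one site: gradient descent on mean squared error
    for a linear model y ~ w . x. Only the updated weights are returned;
    the raw (x, y) pairs stay inside this function."""
    w = list(weights)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / n
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def fed_avg(site_weights, site_sizes):
    """Federated Averaging: average each parameter across sites,
    weighting every site by its number of training samples."""
    total = sum(site_sizes)
    dim = len(site_weights[0])
    return [
        sum(w[j] * n / total for w, n in zip(site_weights, site_sizes))
        for j in range(dim)
    ]


def make_site(n, true_w, rng):
    """Synthetic data for one site (assumption: stands in for private data)."""
    data = []
    for _ in range(n):
        x = [rng.gauss(0, 1) for _ in true_w]
        y = sum(wi * xi for wi, xi in zip(true_w, x)) + rng.gauss(0, 0.01)
        data.append((x, y))
    return data


rng = random.Random(0)
true_w = [2.0, -1.0]
# Three sites holding different amounts of data.
sites = [make_site(n, true_w, rng) for n in (200, 50, 20)]

global_w = [0.0, 0.0]                      # model setup
for _ in range(30):                        # repeat
    updates = [local_update(global_w, d) for d in sites]       # local training
    global_w = fed_avg(updates, [len(d) for d in sites])       # aggregation

print(global_w)  # should end up close to true_w
```

Only the weight lists cross the boundary between `local_update` and the aggregation step, which mirrors the "model updates" step: the central loop never reads any site's `(x, y)` pairs.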

What can you do with Bitfount?

You can use Bitfount to securely adopt AI in a range of scenarios, including:

- Model inference: Run a wide selection of models locally and get predictions on your data. All data remains behind your firewall, and results are accessible only to you.

- Fine-tune models: Adapt foundation models to your sensitive data so they can complete the specific downstream tasks you are interested in. This is more efficient than training a model from scratch.

- Federated learning: The traditional application of federated data science. Federated learning enables you to train models across multiple distributed datasets.

- Federated evaluation: Test model performance on a variety of real-world data you don't have access to in raw form.

- Private set intersection: Determine the overlapping records in two (or more) disparate datasets without giving any collaborator access to the underlying raw data of another party's dataset.

- Private analytics: Run analysis queries over the data and retrieve valuable insights. Data custodians remain in control of what kind of metrics can be retrieved.
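To give a feel for how private set intersection can work in principle, here is a toy sketch of one well-known approach, in which each party blinds hashed record identifiers with a secret exponent (a Diffie-Hellman-style construction). It is an illustration only: the source does not say which protocol Bitfount uses, the `Party` class and `hash_to_group` helper are hypothetical, and the modulus is far too small and simple for real-world security.

```python
import hashlib
import math
import secrets

# Toy modulus (a Mersenne prime). Assumption: chosen for readability only;
# a real deployment would use a vetted cryptographic group.
P = 2**127 - 1


def hash_to_group(item: str) -> int:
    """Map a record identifier to an integer in [1, P - 1]."""
    digest = hashlib.sha256(item.encode("utf-8")).digest()
    return int.from_bytes(digest, "big") % (P - 1) + 1


class Party:
    """One data holder with a private list of identifiers and a secret key."""

    def __init__(self, items):
        self.items = list(items)
        # Pick a secret exponent that is invertible modulo P - 1.
        while True:
            key = secrets.randbelow(P - 3) + 2
            if math.gcd(key, P - 1) == 1:
                break
        self._key = key

    def first_pass(self):
        """Blind our own hashed identifiers with our secret key."""
        return [pow(hash_to_group(i), self._key, P) for i in self.items]

    def second_pass(self, blinded):
        """Apply our key to values already blinded by the other party."""
        return [pow(v, self._key, P) for v in blinded]


alice = Party(["p001", "p002", "p003"])
bob = Party(["p002", "p003", "p004"])

# Alice's identifiers, blinded by Alice and then by Bob (order preserved).
a_twice = bob.second_pass(alice.first_pass())
# Bob's identifiers, blinded by Bob and then by Alice.
b_twice = set(alice.second_pass(bob.first_pass()))

# Exponentiation commutes, so matching records produce equal values.
shared = [item for item, v in zip(alice.items, a_twice) if v in b_twice]
print(shared)
```

Each party only ever sees the other's identifiers in blinded form; Alice learns which of her own records also appear in Bob's list, and nothing about the rest of his data beyond its size.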

If you would like to learn more about what you could achieve with Bitfount, please contact us at support@bitfount.com.